Out of the box, Portainer CE doesn't offer Entra ID (formerly Azure AD) as an SSO provider.

That's mostly because CE is aimed at non-commercial use, but I have a private Microsoft 365 tenant of my own, so I wanted to use Entra ID authentication with it.

In this guide, I'll show you how to configure Entra ID sign-in using Portainer's Custom OAuth provider, since figuring it out myself wasn't a breeze.

Why Use Entra ID with Portainer CE?

- Single Sign-On (SSO): Use Entra ID credentials to log in to Portainer.

- Enhanced Security: Enforce policies such as multi-factor authentication (MFA) via Entra ID.

- Simplified User Management: Centralize access control through your existing Entra ID setup.

Prerequisites

- A running instance of Portainer CE (version 2.9 or later).

- An Entra ID tenant (part of a Microsoft 365 or Azure subscription).

- Administrative privileges on both Entra ID and Portainer CE.

Step 1: Register an App in Entra ID

- Log in to the Entra ID portal:

- Open the Microsoft Entra admin center (https://entra.microsoft.com).

- Create a New App Registration:

- Go to Entra ID > App registrations > + New registration.

- Provide a name for the app (e.g., `Portainer OAuth`).

- Set Supported Account Types:

- Single Tenant (if only your organization will use Portainer).

- Add a Redirect URI:

- Type: Web

- URI: `https://<your-portainer-url>`

- Replace `<your-portainer-url>` with your Portainer CE domain or IP address. HTTPS is required; Entra ID only accepts plain HTTP redirect URIs for `localhost`.

- Click Register.

- Save the Key Details:

- After registration, copy:

- Application (client) ID

- Directory (tenant) ID

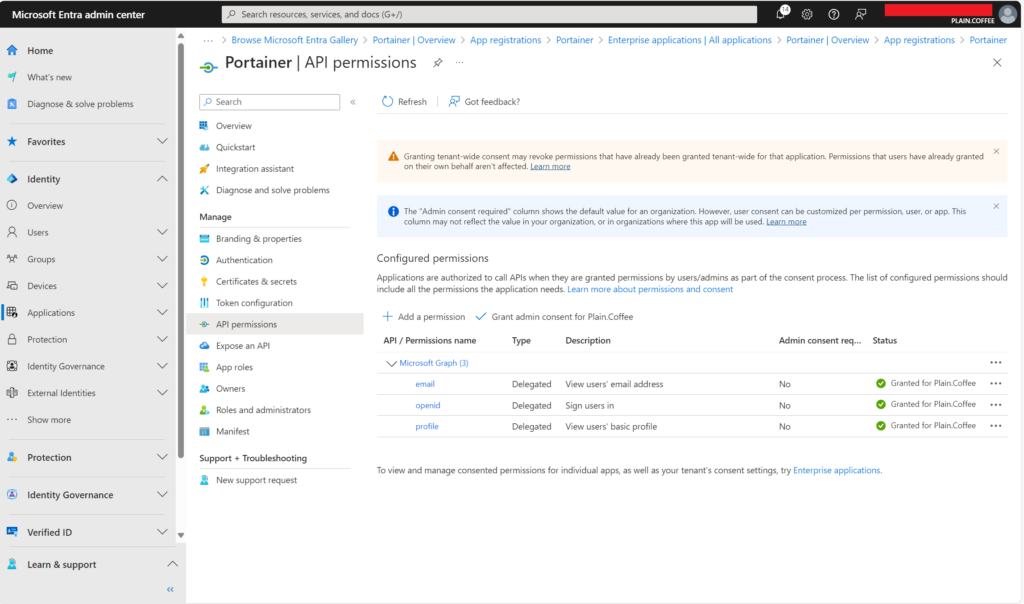
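The redirect URI rules from this step (HTTPS, and the bare Portainer URL rather than an `/oauth/callback` path, as the troubleshooting section also notes) are easy to get wrong. Here is a minimal Python sketch of a sanity check; `check_redirect_uri` is a hypothetical helper that encodes only these two rules, not Entra ID's actual validation logic.

```python
from urllib.parse import urlsplit


def check_redirect_uri(uri: str) -> list[str]:
    """Return problems found in a Portainer redirect URI (empty list = looks OK).

    Hypothetical helper: it encodes only the rules from this guide,
    not Entra ID's full validation logic.
    """
    problems = []
    parts = urlsplit(uri)
    # Entra ID accepts plain HTTP only for localhost; everything else needs HTTPS.
    if parts.scheme != "https" and not (parts.scheme == "http" and parts.hostname == "localhost"):
        problems.append("use HTTPS (plain HTTP is only accepted for localhost)")
    # Per this guide, Portainer expects its base URL here, not an /oauth/callback path.
    if parts.path.rstrip("/").endswith("/oauth/callback"):
        problems.append("drop the /oauth/callback path and use the Portainer base URL")
    return problems


if __name__ == "__main__":
    # Example URLs are placeholders; substitute your own Portainer address.
    for candidate in (
        "https://portainer.example.com",
        "http://portainer.example.com",
        "https://portainer.example.com/oauth/callback",
    ):
        print(candidate, "->", check_redirect_uri(candidate) or "OK")
```

The first candidate passes, while the other two each report one problem.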

Step 2: Configure Permissions in Entra ID

- Add API Permissions:

- Go to API Permissions > + Add a permission.

- Select Microsoft Graph > Delegated Permissions.

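- Add `openid`, `profile`, and `email`. Keep the default `User.Read` permission as well, since the Microsoft Graph `/me` endpoint used as the Resource URL in Step 3 needs it.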

- Click Grant admin consent to apply permissions for all users.

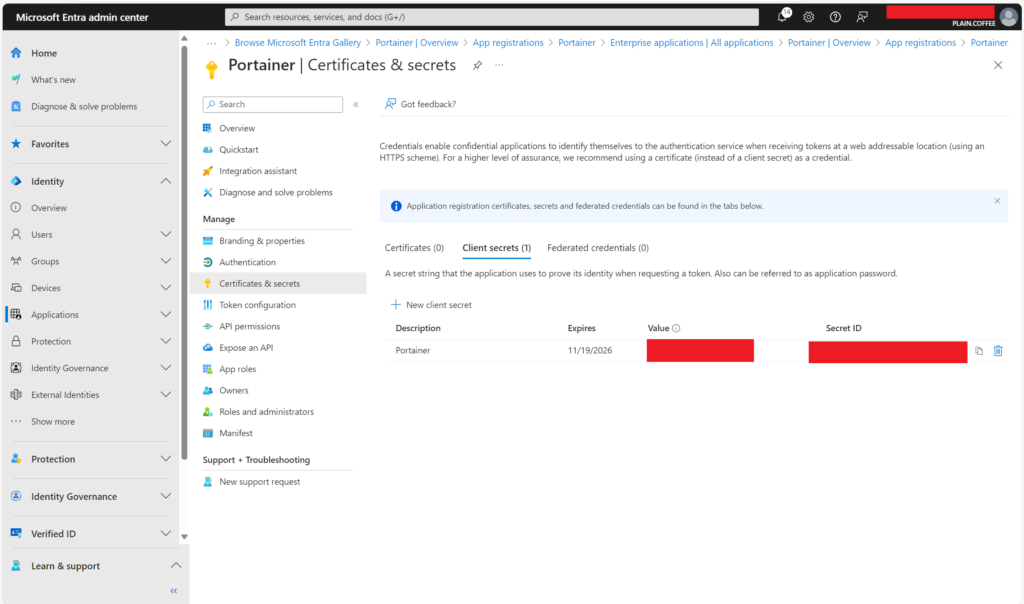

- Create a Client Secret:

- Go to Certificates & Secrets > + New Client Secret.

- Add a description (e.g., `Portainer OAuth`).

- Set an expiration period (e.g., 12 months).

- Copy the client secret's Value (not the Secret ID) right away. Entra ID only shows it once, and you'll need it for Portainer.

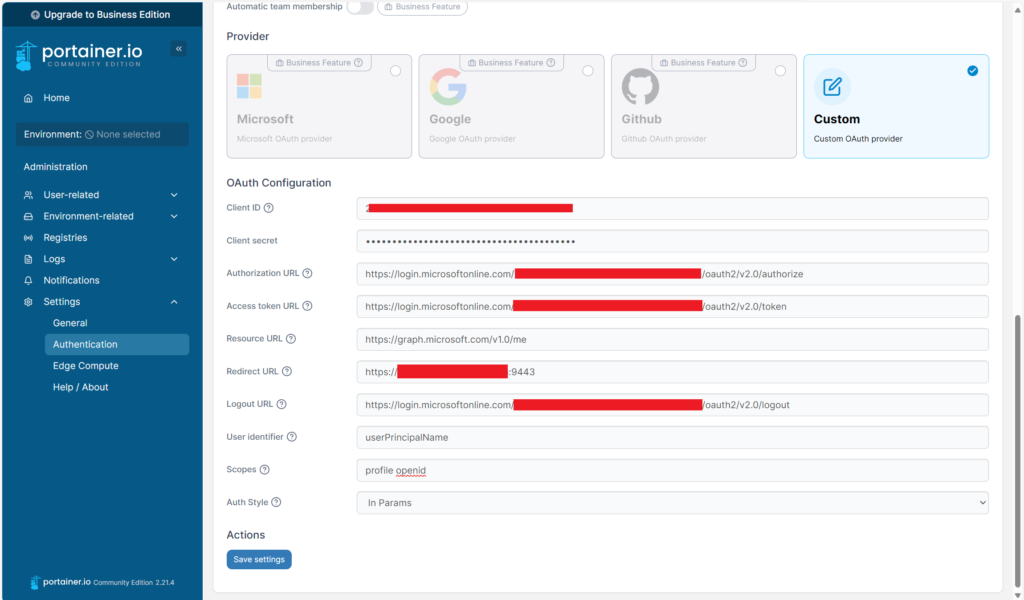
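In a standard OAuth 2.0 authorization-code flow, the client ID and this secret are sent to the Access Token URL when the sign-in code is exchanged for a token. Going by the usual meaning of these options in OAuth client libraries, the Auth Style setting in Step 3 decides where they travel: "In Params" puts them in the form body, while "In Header" sends them as HTTP Basic credentials. The Python sketch below builds both variants without sending anything; `build_token_request` and the placeholder values are hypothetical, not Portainer's code.

```python
import base64
from urllib.parse import quote_plus, urlencode


def build_token_request(client_id: str, client_secret: str, code: str,
                        redirect_uri: str, auth_style: str = "in_params"):
    """Build (headers, body) for an authorization-code token request; nothing is sent."""
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    form = {
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,
    }
    if auth_style == "in_params":
        # Credentials travel in the form body alongside the code.
        form["client_id"] = client_id
        form["client_secret"] = client_secret
    elif auth_style == "in_header":
        # RFC 6749 section 2.3.1: form-encode id and secret, then Base64 them.
        creds = f"{quote_plus(client_id)}:{quote_plus(client_secret)}"
        headers["Authorization"] = "Basic " + base64.b64encode(creds.encode()).decode()
    else:
        raise ValueError(f"unknown auth_style: {auth_style!r}")
    return headers, urlencode(form)


if __name__ == "__main__":
    for style in ("in_params", "in_header"):
        headers, body = build_token_request(
            "my-client-id", "my-secret", "code-from-redirect",
            "https://portainer.example.com", style,
        )
        print(style, headers, body, sep="\n  ")
```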

Step 3: Configure Custom OAuth in Portainer CE

- Log in to Portainer:

- Access your Portainer CE instance as an administrator.

- Navigate to Authentication Settings:

- Go to Settings > Authentication.

- Select OAuth as the authentication method, then choose the Custom provider.

- Enter the Entra ID OAuth Details:

Use the following settings, substituting your own values:

- Client ID: `<Your Application (client) ID>`

- Client Secret: `<Your Client Secret>`

- Authorization URL: `https://login.microsoftonline.com/<Your-Tenant-ID>/oauth2/v2.0/authorize`

- Access Token URL: `https://login.microsoftonline.com/<Your-Tenant-ID>/oauth2/v2.0/token`

- Resource URL: `https://graph.microsoft.com/v1.0/me`

- Redirect URL: `https://<your-portainer-url>`

- Logout URL: `https://login.microsoftonline.com/<Your-Tenant-ID>/oauth2/v2.0/logout`

- User Identifier: `userPrincipalName`

- Scopes: `openid profile`

- Auth Style: In Params
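Three of these URLs differ only in their last path segment, so generating them from the tenant ID avoids copy-paste typos. A minimal Python sketch, assuming you substitute your own IDs; `portainer_oauth_settings` is a hypothetical helper, and its dictionary keys simply mirror the field labels in the list above.

```python
def portainer_oauth_settings(tenant_id: str, client_id: str,
                             client_secret: str, portainer_url: str) -> dict[str, str]:
    """Return the Custom OAuth field values from this step (hypothetical helper)."""
    base = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0"
    return {
        "Client ID": client_id,
        "Client Secret": client_secret,
        "Authorization URL": f"{base}/authorize",
        "Access Token URL": f"{base}/token",
        "Resource URL": "https://graph.microsoft.com/v1.0/me",
        # Not normalized on purpose: it must match the Entra ID redirect URI exactly.
        "Redirect URL": portainer_url,
        "Logout URL": f"{base}/logout",
        "User Identifier": "userPrincipalName",
        "Scopes": "openid profile",
        "Auth Style": "In Params",
    }


if __name__ == "__main__":
    # Placeholder values; replace them with your own IDs, secret, and URL.
    fields = portainer_oauth_settings(
        tenant_id="00000000-0000-0000-0000-000000000000",
        client_id="11111111-1111-1111-1111-111111111111",
        client_secret="<your-client-secret>",
        portainer_url="https://portainer.example.com",
    )
    for name, value in fields.items():
        print(f"{name:18} {value}")
```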

Step 4: Test the Integration

- Log out of Portainer and access the login page.

- You should see the OAuth login option.

- Authenticate using your Entra ID credentials.

- If successful, you'll be redirected to Portainer's dashboard. Don't forget to give the new account permissions: the Community Edition can't automatically add OAuth users to a team!
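When you click the OAuth login option, Portainer sends your browser to the Authorization URL with an authorization-code request. If sign-in fails, building that request by hand and opening it in a browser can show whether Entra ID accepts your client ID and redirect URI. This sketch assumes the standard OAuth 2.0 parameters (`client_id`, `response_type=code`, `redirect_uri`, `scope`, `state`); the exact set Portainer sends may differ, and `build_authorize_url` is a hypothetical helper.

```python
import secrets
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def build_authorize_url(tenant_id: str, client_id: str, redirect_uri: str,
                        scopes: str = "openid profile",
                        state: Optional[str] = None) -> str:
    """Build a standard authorization-code request URL for Entra ID (hypothetical helper)."""
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,  # must match the app registration exactly
        "scope": scopes,
        "state": state or secrets.token_urlsafe(16),  # random anti-CSRF value
    }
    base = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/authorize"
    return f"{base}?{urlencode(params)}"


if __name__ == "__main__":
    # Placeholder IDs; substitute your Directory (tenant) ID and Application (client) ID.
    url = build_authorize_url(
        tenant_id="your-tenant-id",
        client_id="your-client-id",
        redirect_uri="https://portainer.example.com",
    )
    print(url)
    # Decode the query string again to double-check what will be sent.
    print(parse_qs(urlsplit(url).query))
```

After you sign in, Entra ID should either redirect to your Portainer URL with a `code` query parameter (Portainer itself may reject a hand-made request like this) or show an AADSTS error; AADSTS50011, for instance, indicates a redirect URI mismatch.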

Common Issues and Troubleshooting

- Unauthorized Error:

- Ensure that Auth Style is set to In Params.

- Redirect URI Mismatch:

- Ensure the Redirect URI in Portainer exactly matches the one configured in Entra ID. Don't append `/oauth/callback`, as some guides suggest.

- Missing Claims:

- Add optional claims in Entra ID:

- Go to Token Configuration > + Add optional claim.

- Add the following claims for the ID token:

`email`, `name`, and `upn` (User Principal Name).

- Token Validation Errors:

- Ensure the `openid`, `profile`, and `email` scopes are properly configured and have been granted admin consent.
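For the missing-claims and token-validation issues, it helps to see which claims a token actually contains. An Entra ID ID token is a JWT whose middle segment is Base64url-encoded JSON, so a few lines of Python can inspect it. This sketch skips signature verification entirely, so use it only to look at tokens, never to trust them; `decode_jwt_payload` and `missing_claims` are hypothetical helpers, and how you capture a token is up to you.

```python
import base64
import json


def decode_jwt_payload(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying its signature (inspection only)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT of the form header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # JWTs strip Base64 padding; restore it
    return json.loads(base64.urlsafe_b64decode(payload))


def missing_claims(token: str, wanted=("email", "name", "upn")) -> list[str]:
    """List which wanted claims are absent; defaults mirror the optional claims above."""
    claims = decode_jwt_payload(token)
    return [claim for claim in wanted if claim not in claims]
```

If `missing_claims(id_token)` returned `['email']`, for example, that would suggest the optional `email` claim isn't being issued for your app registration.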

Conclusion

Integrating Portainer CE with Entra ID provides a secure and centralized authentication solution for your containerized environments. By leveraging OAuth, you can streamline user access, enforce MFA, and manage access control directly from Entra ID.